Consensus Among Experts

The agreement is striking. Sam Altman speaks of “a few thousand days” until AGI, roughly 3,500 days or just under ten years. Demis Hassabis says 3–5 years. A former OpenAI developer believes it could be just two years. Even Elon Musk, not exactly known for understatement, estimates 3–6 years.

These estimates may sound optimistic, but the data tells a clear story. In the largest survey of AI researchers to date, over 2,700 scientists collectively estimated a 10% chance that AI systems will surpass humans in most tasks by 2027.

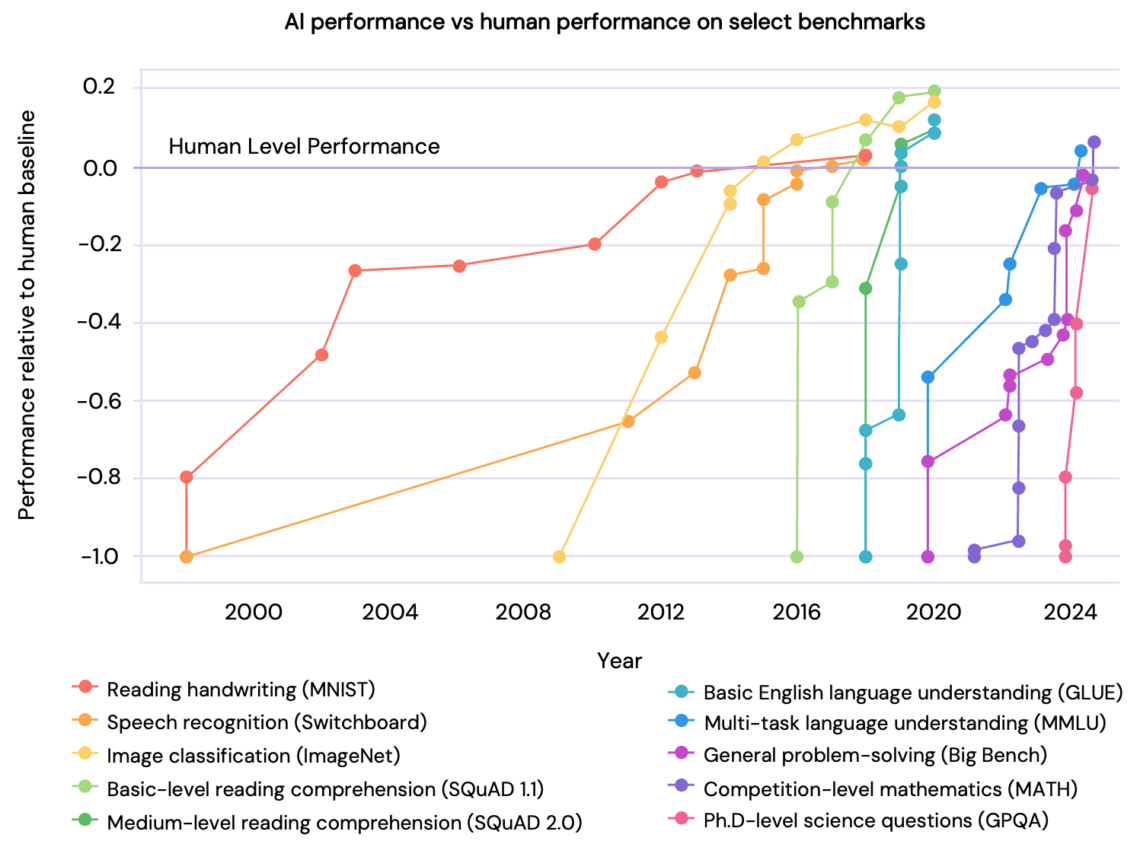

Performance Increases Are Measurable

The International AI Safety Report 2025, led by Yoshua Bengio, powerfully illustrates how AI systems are surpassing “human-level performance” in more and more disciplines. The development cycles are becoming shorter. What used to take decades now happens in years or even months.

Especially revealing: progress follows a predictable pattern. Once an area is digitized and transformed into training data, it’s only a matter of time and compute power before AI systems surpass human experts.

The Compute Equation

Our paper analyzes the critical role of compute. Current estimates suggest that the human brain has roughly 1,000× more neurons than today’s LLMs have neuron equivalents. For synapses, the gap is smaller, at a factor of around 100.

The authors of “AI 2027” calculate that achieving a 1,000× increase in compute could mean individual projects using hundreds of millions of high-end GPUs by 2027. That sounds massive, but it is not impossible if exponential compute growth continues.

The energy demands, however, would be enormous: 70 gigawatts, compared to the 30 watts of the human brain. That is the output of roughly 70 large power plants at about 1 gigawatt each. This reveals physical and economic limits.
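The comparison above can be checked with a few lines of arithmetic. The sketch below uses the two figures from the text (70 gigawatts and 30 watts). The per-plant output is an assumption introduced here for illustration only, and the resulting plant count scales inversely with it.

```python
# Figures quoted in the text.
DATACENTER_POWER_W = 70e9  # 70 gigawatts
BRAIN_POWER_W = 30.0       # 30 watts

# Assumption for illustration only (not from the text):
# one large power plant delivers about 1 gigawatt.
ASSUMED_PLANT_OUTPUT_W = 1e9


def ratio(numerator_w: float, denominator_w: float) -> float:
    """Return how many times denominator_w fits into numerator_w."""
    if denominator_w <= 0:
        raise ValueError("denominator must be positive")
    return numerator_w / denominator_w


brain_equivalents = ratio(DATACENTER_POWER_W, BRAIN_POWER_W)
plants_needed = ratio(DATACENTER_POWER_W, ASSUMED_PLANT_OUTPUT_W)

print(f"Human brains at 30 W each: {brain_equivalents:.2e}")
print(f"Plants at 1 GW each: {plants_needed:.0f}")
```

Under these assumptions, 70 gigawatts corresponds to about 2.3 billion brain equivalents and to 70 plants of 1 gigawatt each. A smaller plant count would require correspondingly larger plants.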

The Data Question

Another limit could be the availability of training data. Nearly all of the publicly available text on the internet has already been used to train current LLMs. New sources must be tapped or generated artificially.

But here too, researchers show remarkable creativity. Synthetic data, simulations, and novel data sources continue to expand the training pool. Yann LeCun points out that a four-year-old child has taken in about as much visual data as LLMs extract from the internet. With video and other sensory data still largely untapped, the room for expansion is vast.

Investments Without Precedent

The financial resources are flowing at an unprecedented scale. The Stargate project alone is planning $500 billion over four years. Tech giants are investing tens of billions in data centers. Meta plans to spend between $60 and $65 billion in 2025.

These investments are not hype, but calculated bets on a foreseeable technological revolution. Companies consider this development so certain that they’re willing to risk massive sums.

The Architecture Debate

One open question remains: Is scaling existing architectures enough? Sam Altman is convinced that OpenAI knows what is needed. Demis Hassabis sees a 50% chance that no further breakthroughs like the transformer architecture will be required.

Yann LeCun strongly disagrees. For him, today’s LLMs are fundamentally limited. But even if new architectures are needed, history shows that breakthroughs often arrive sooner than expected. The path from the perceptron to the transformer took decades, yet development is now accelerating exponentially.

Historical Parallels

Our paper reminds us that technological forecasts have often been too conservative. In 2017, Max Tegmark published Life 3.0, in which experts predicted AGI in 10–30 years at the earliest, with many estimating 100 years or never. Just eight years later, those same experts speak of 2–5 years.

The shift in timeframes is dramatic: what was considered “this century” in 2017 is now expected within the next few years. This acceleration follows a well-known pattern of technological revolutions.

Critical Factors

In summary, our research identifies three key factors for the timeline:

- Compute scaling: With Moore’s Law and specialized AI chips, a 1,000× increase by 2030 is plausible

- Data availability: New sources and synthetic data continuously expand training possibilities

- Algorithmic advances: Even without revolutionary breakthroughs, architectures continue to improve

All three factors are progressing in parallel and reinforcing each other. This doesn’t make 2027 a certainty, but it does make it a realistic possibility we must prepare for.
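To put the compute-scaling factor from the list above in perspective, the following sketch computes the constant yearly growth needed to reach 1,000× and compares it with a plain two-year doubling. The five-year window (2025 to 2030) and the two-year doubling period are assumptions made here for illustration; the text states neither.

```python
import math


def required_annual_factor(total_factor: float, years: float) -> float:
    """Constant yearly multiplier that compounds to total_factor over `years`."""
    if total_factor <= 0 or years <= 0:
        raise ValueError("total_factor and years must be positive")
    return total_factor ** (1.0 / years)


def doubling_time_years(annual_factor: float) -> float:
    """Doubling time implied by a constant yearly multiplier greater than 1."""
    if annual_factor <= 1:
        raise ValueError("annual_factor must be greater than 1")
    return math.log(2) / math.log(annual_factor)


TARGET_FACTOR = 1000.0      # 1,000x increase, from the text
YEARS = 5.0                 # assumption: 2025 to 2030
MOORE_DOUBLING_YEARS = 2.0  # assumption: classic two-year doubling

needed = required_annual_factor(TARGET_FACTOR, YEARS)
moore_total = 2.0 ** (YEARS / MOORE_DOUBLING_YEARS)

print(f"Required growth per year: {needed:.2f}x")
print(f"Implied doubling time: {doubling_time_years(needed):.2f} years")
print(f"Two-year doubling over {YEARS:.0f} years: {moore_total:.2f}x")
```

Under these assumptions, compute would have to grow about 4× per year, a doubling roughly every six months, while a two-year doubling alone would yield under 6× over the same span. The remainder would have to come from specialized chips and far larger deployments.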

Conclusion: Time Is Running Out

The analysis shows that the projected timelines are not science fiction. They are based on measurable trends, massive investments, and the consensus of leading experts. Whether AGI arrives in 2027 or 2030 is almost secondary. What matters is that we prepare now for this future.

The question is no longer whether AGI is coming — but how we ensure it is developed for the benefit of humanity. The time to set the right course is running out.

At SemanticEdge, we are closely monitoring these developments. As a pioneer of conversational AI, we understand both the potential and the risks of advanced AI systems. SemanticEdge stands for secure and transparent conversational AI through the interplay of generative AI with a second, expressive, rule-based intelligence that minimizes the risk of hallucinations and alignment faking.